Titan X GPU Deep Learning

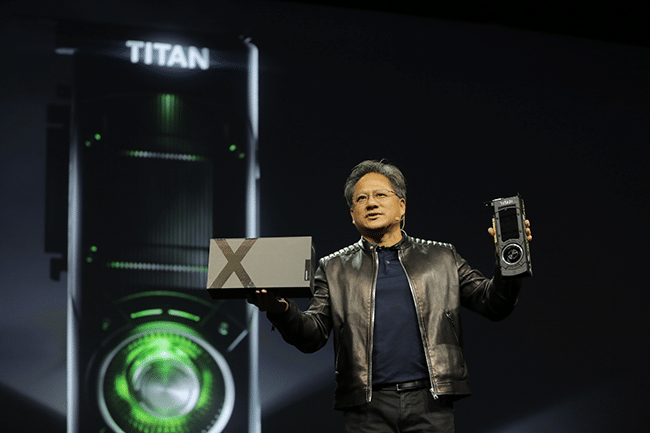

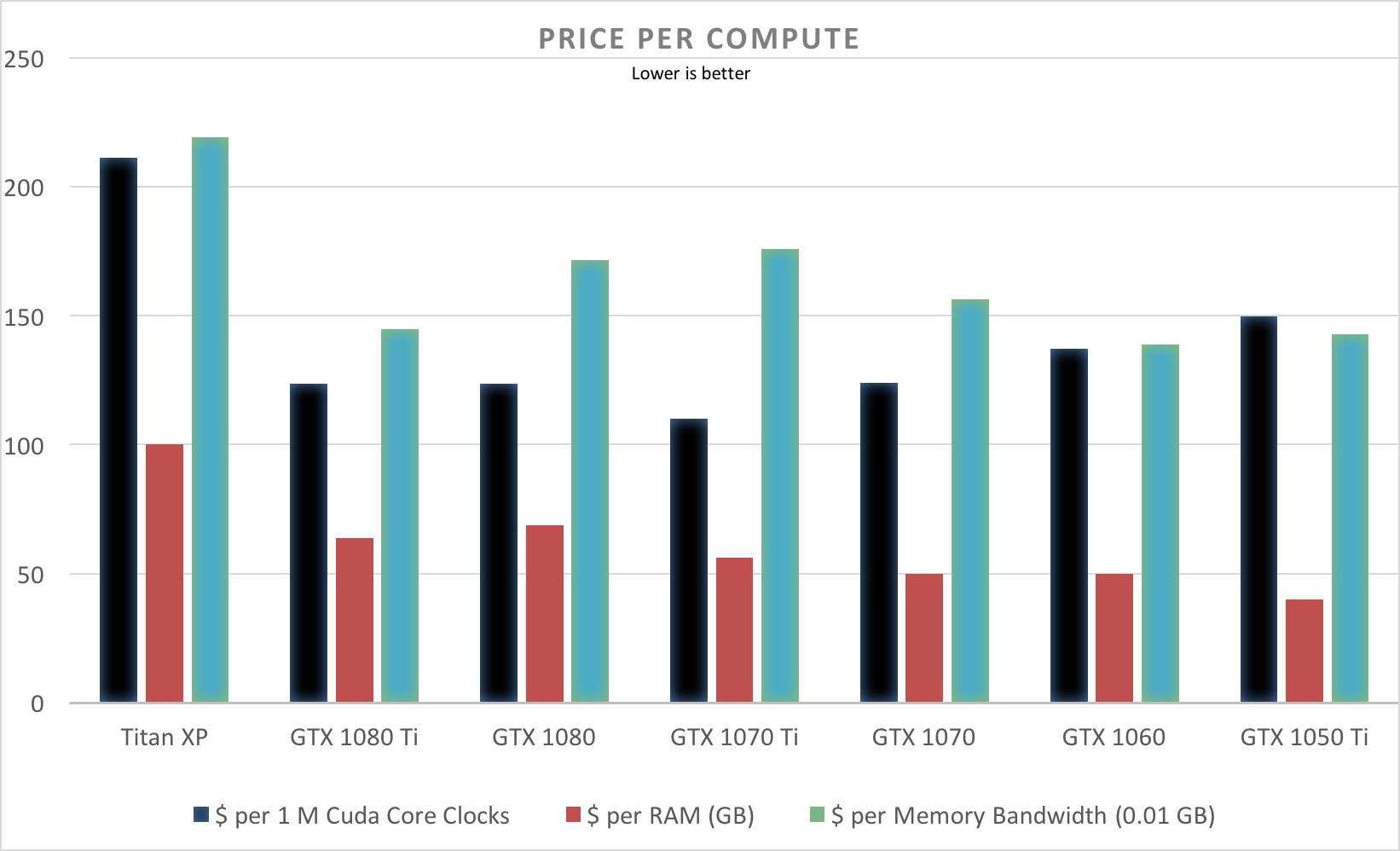

TITAN X is NVIDIA’s new flagship GeForce gaming GPU, but it’s also uniquely suited for deep learning. NVIDIA gave a sneak peek of TITAN X two weeks ago at the Game Developers Conference in San Francisco, where it drove a stunning virtual reality experience called “Thief in the Shadows,” based on the dragon Smaug from “The Hobbit.” Best GPU overall (by a small margin): Titan Xp. Cost efficient but expensive: GTX 1080 Ti, GTX 1070, GTX 1080. Cost efficient and cheap: GTX 1060 (6GB). I work with data sets > 250GB: GTX Titan X. At the GPU Technology Conference, Nvidia CEO Jen-Hsun Huang unveils the Titan X GPU, deep-learning development tools, a board for automakers, and details on the upcoming Pascal architecture.

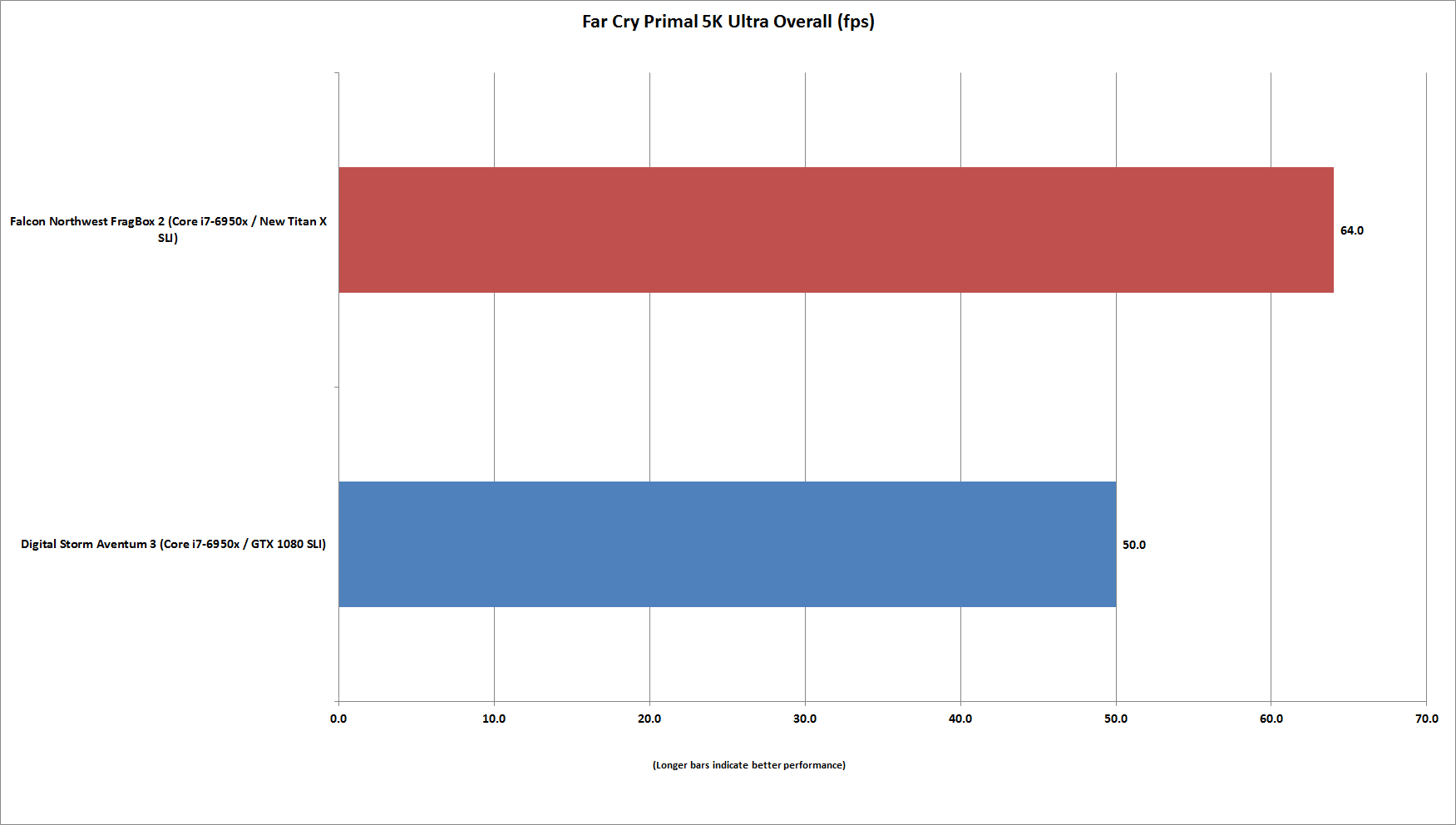

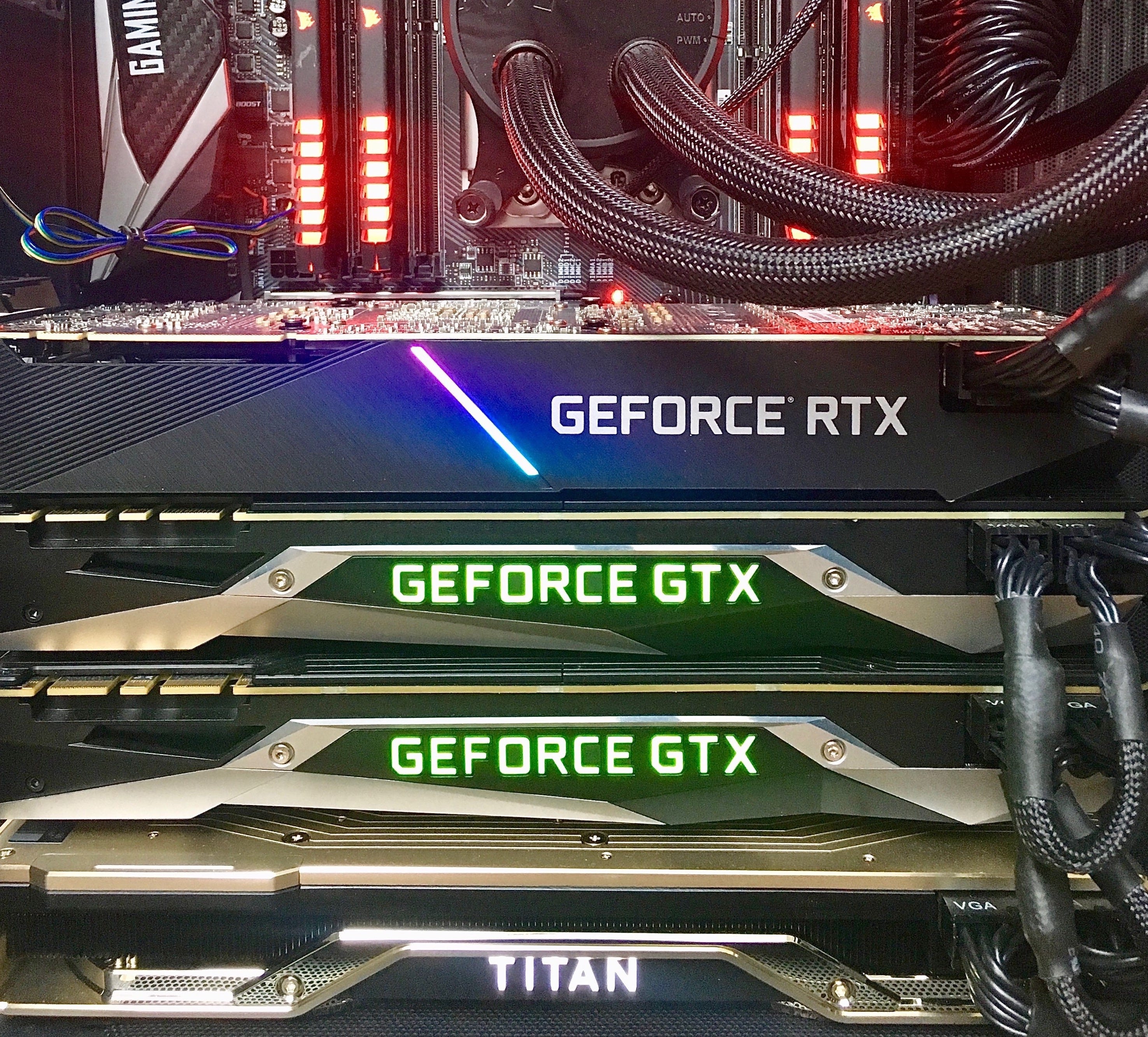

Deep learning performance scales well with multiple GPUs, at least up to 4 GPUs: 2 GPUs can often outperform the next more powerful GPU in terms of price and performance. This is true when comparing 2 x RTX 2080 Ti to a Titan RTX, and 2 x Titan RTX to a Tesla V100. Deep learning workstation PC with GTX Titan vs. server with NVIDIA Tesla V100 vs. cloud instance: selection of a workstation for deep learning. GPUs are the heart of deep learning, because the computation involved in deep learning consists of matrix operations that run in parallel. Best GPU overall: NVIDIA Titan Xp, GTX Titan X (Maxwell).
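The price/performance argument for two cheaper GPUs versus one more powerful GPU can be made concrete with a small calculation. The sketch below is illustrative only: the `GpuOption` type, the prices, the throughput figures, and the 0.9 scaling factor are hypothetical placeholders, not measurements from any of the sources quoted here.

```python
from dataclasses import dataclass


@dataclass
class GpuOption:
    """A hypothetical purchase option: one or more identical GPUs."""
    name: str
    count: int
    unit_price: float        # price per card in dollars (placeholder value)
    unit_throughput: float   # single-card training throughput, e.g. images/s
    scaling: float = 1.0     # assumed contribution of each extra card (0..1]

    def throughput(self) -> float:
        # The first card counts fully; each extra card adds `scaling` of a card.
        return self.unit_throughput * (1 + (self.count - 1) * self.scaling)

    def price(self) -> float:
        return self.unit_price * self.count

    def throughput_per_dollar(self) -> float:
        return self.throughput() / self.price()


# Placeholder numbers chosen only to illustrate the comparison.
two_small = GpuOption("2 x smaller GPU", count=2, unit_price=1000.0,
                      unit_throughput=100.0, scaling=0.9)
one_big = GpuOption("1 x larger GPU", count=1, unit_price=2500.0,
                    unit_throughput=150.0)

for option in (two_small, one_big):
    print(f"{option.name}: {option.throughput():.0f} img/s, "
          f"{option.throughput_per_dollar():.3f} img/s per dollar")
```

Substituting measured throughput and current prices for the placeholders shows whether the two-card option actually wins for a given workload.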

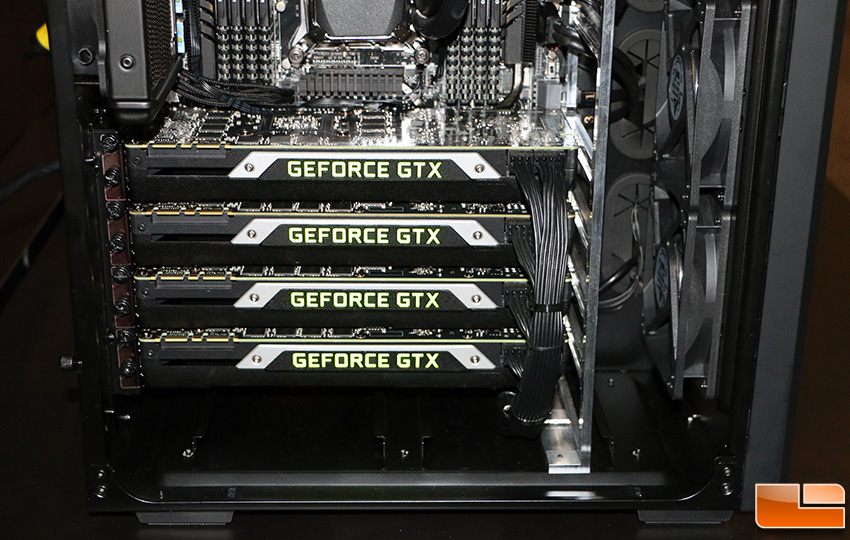

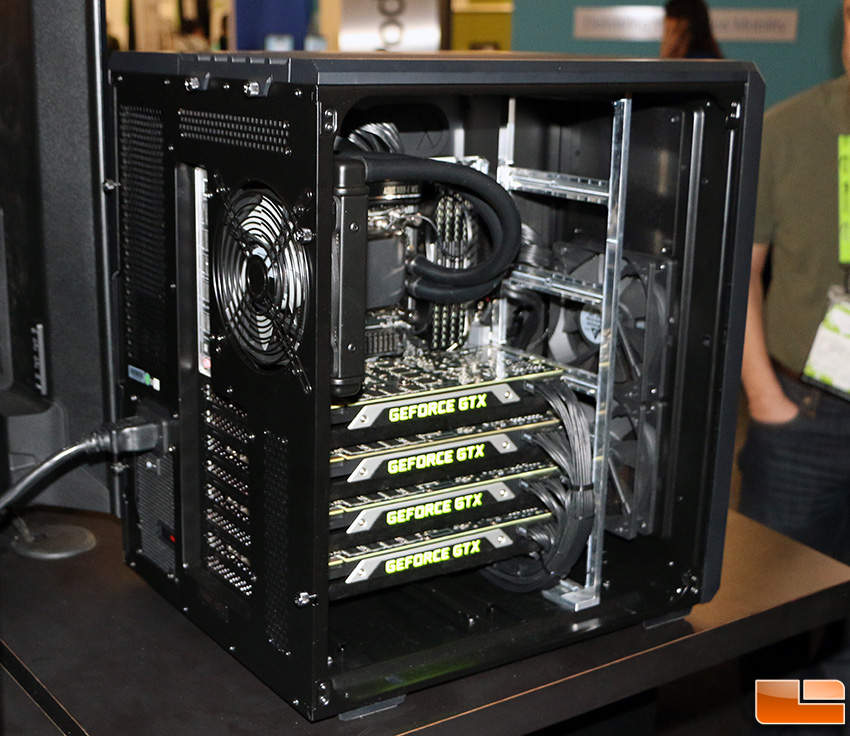

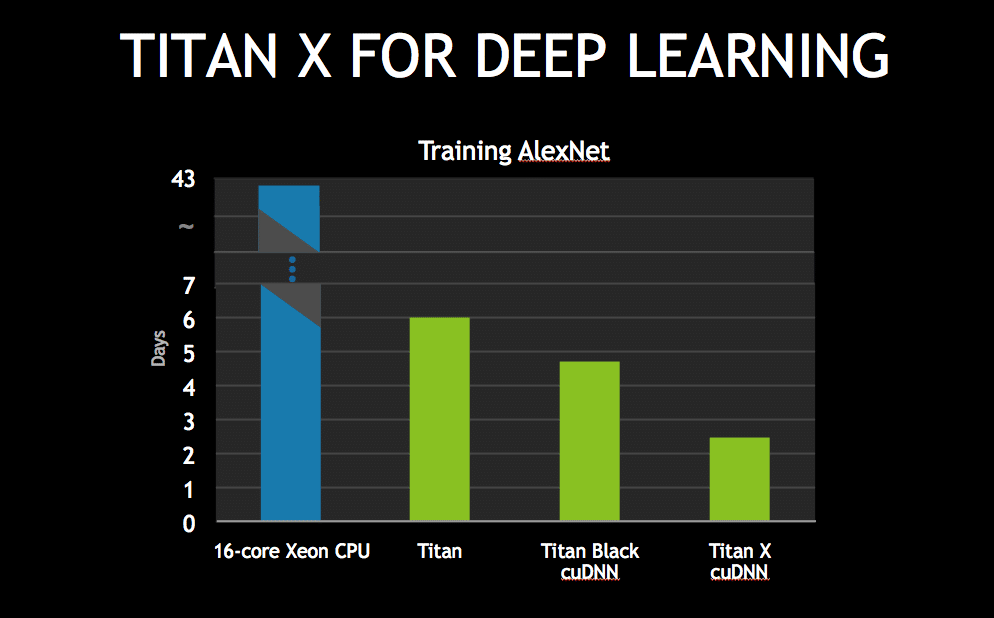

For this experiment, I trained on a single Titan X GPU on my NVIDIA DevBox. Each epoch took ~63 seconds, with a total training time of 74m10s. I then executed the following command to train with all four of my Titan X GPUs: `$ python train.py --output multi_gpu.png --gpus 4`. The log then reported “INFO loading CIFAR-10 data” and “INFO training with 4 GPUs.” Best-performing GPU for deep learning models: so-called passively cooled GPU accelerators are no exception in the data center segment. The term “passive cooling” applies only to the extent that the cards themselves do not use fans, but the cooler has a constant air flow through it. There’s been much industry debate over which NVIDIA GPU card is best suited for deep learning and machine learning applications. At GTC 2015, NVIDIA CEO and co-founder Jen-Hsun Huang announced the release of the GeForce Titan X, touting it as “the most powerful processor ever built for training deep neural networks.” Within months, NVIDIA proclaimed the Tesla K80 is the ideal choice for...
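To judge a single-GPU versus four-GPU run like the one described above, it helps to turn epoch times into a speedup and a scaling efficiency. This is a minimal sketch: the 63-second single-GPU epoch comes from the text, while the 21-second four-GPU epoch is a hypothetical placeholder, since the snippet does not report the multi-GPU timing. The function names are illustrative.

```python
def speedup(single_gpu_seconds: float, multi_gpu_seconds: float) -> float:
    """How many times faster the multi-GPU epoch is than the single-GPU epoch."""
    if single_gpu_seconds <= 0 or multi_gpu_seconds <= 0:
        raise ValueError("epoch times must be positive")
    return single_gpu_seconds / multi_gpu_seconds


def scaling_efficiency(single_gpu_seconds: float,
                       multi_gpu_seconds: float,
                       num_gpus: int) -> float:
    """Fraction of ideal linear scaling achieved (1.0 means perfect scaling)."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return speedup(single_gpu_seconds, multi_gpu_seconds) / num_gpus


single_epoch = 63.0   # seconds per epoch on one Titan X, from the text
multi_epoch = 21.0    # hypothetical placeholder for the 4-GPU epoch time

print(f"speedup: {speedup(single_epoch, multi_epoch):.2f}x")
print(f"efficiency: {scaling_efficiency(single_epoch, multi_epoch, 4):.0%}")
```

An efficiency well below 100% usually points at data loading or inter-GPU communication overhead, not at the GPUs themselves.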

It’s not only a multicore CPU monster with new Intel Deep Learning Boost (Intel DL Boost), but can double as a titan of GPU-centric workloads as well, all thanks to the latest cutting-edge workstation CPU technology from Intel. On the new Xeon W they added more pipes! Specifically, there are now 64 dedicated PCIe lanes. RTX 2080 Ti is the best GPU for machine learning / deep learning if 11 GB of GPU memory is sufficient for your training needs (for many people, it is). The 2080 Ti offers the best price/performance among the Titan RTX, Tesla V100, Titan V, GTX 1080 Ti, and Titan Xp.

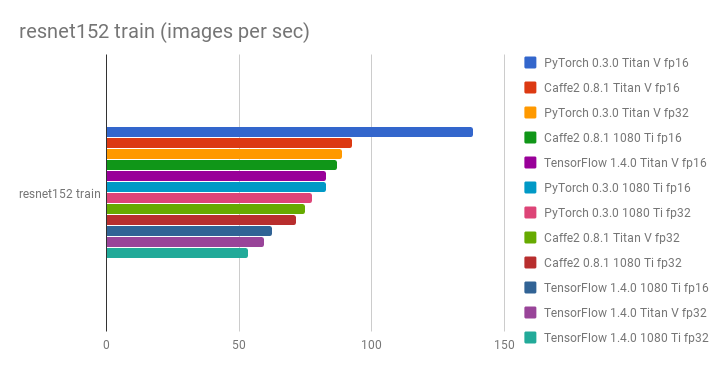

There are two identical Nvidia GTX TITAN X cards on the board. I can use these cards as usual. However, when I run any deep learning program (e.g. Caffe, TensorFlow, MatConvNet), the system crashes and the screen goes black. The output of the command `nvidia-smi` is as follows: “Unable to determine the device handle for GPU ... GPU is lost.” The K40, K80, M40, and M60 are old GPUs and have been discontinued since 2016. Here’s an update for April 2019: I’ll quickly answer the original question before moving on to the GPUs of 2019. From fastest to slowest: M60 > K80 > M40 > K40. These GP... In this post I’ll be looking at some common machine learning (deep learning) application job runs on a system with 4 Titan V GPUs. I will repeat the job runs at PCIe x16 and x8. I looked at this issue a couple of years ago and wrote it up in the post “PCIe X16 vs X8 for GPUs when running cuDNN and Caffe.”
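For the “GPU is lost” report above, a monitoring script can watch `nvidia-smi` output for the same error text before a training job is started. The sketch below is an assumption-laden illustration, not a diagnosis tool: the marker strings are taken from the error quoted in the text, the helper names are hypothetical, and the script returns an empty string if `nvidia-smi` is not installed or does not respond.

```python
import shutil
import subprocess

# Marker strings taken from the error message quoted in the text.
LOST_MARKERS = ("GPU is lost", "Unable to determine the device handle")


def looks_like_lost_gpu(text: str) -> bool:
    """Return True if the given nvidia-smi output contains a lost-GPU marker."""
    return any(marker in text for marker in LOST_MARKERS)


def query_nvidia_smi(timeout_seconds: float = 30.0) -> str:
    """Return stdout plus stderr of `nvidia-smi`, or '' if it cannot be run."""
    if shutil.which("nvidia-smi") is None:
        return ""
    try:
        result = subprocess.run(["nvidia-smi"], capture_output=True,
                                text=True, check=False,
                                timeout=timeout_seconds)
    except subprocess.TimeoutExpired:
        return ""
    return result.stdout + result.stderr


if __name__ == "__main__":
    output = query_nvidia_smi()
    if looks_like_lost_gpu(output):
        print("nvidia-smi reports a lost GPU; check power, cooling, and drivers")
    else:
        print("no lost-GPU message found in nvidia-smi output")
```

Detecting the message does not explain the cause; it only makes the failure visible early instead of as a black screen in the middle of a run.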

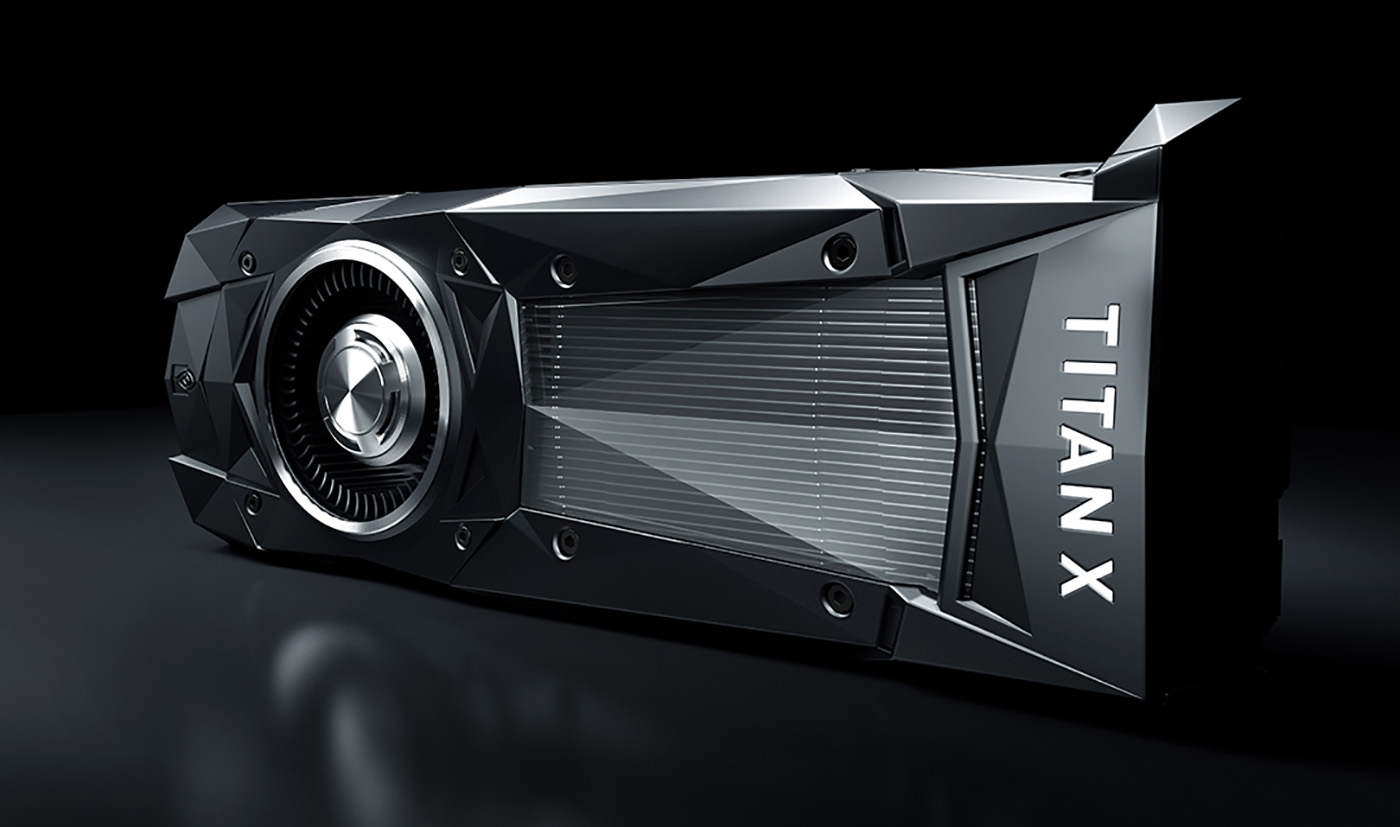

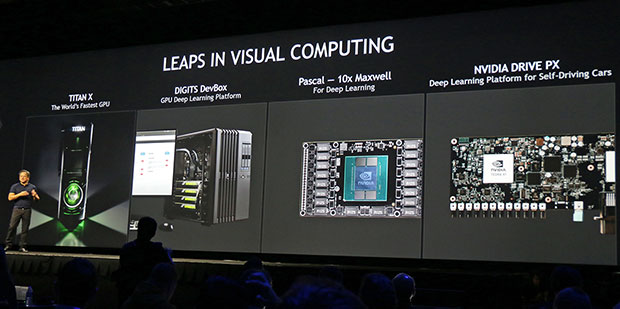

With the Titan X, it took ~5 seconds with a single GPU. Since I’m experimenting with deep learning heavily, I decided to go for the second Titan X as an investment. With the 2 x Titan X setup, I... The new TITAN X is Nvidia’s fourth GeForce GPU based on the 16nm Pascal architecture. It is much faster than the GTX 1080, formerly the world’s fastest video card. The TITAN X is premium-priced starting at $1,200, $200 more than the Maxwell TITAN X, and it is only available directly from Nvidia. Besides being the world’s fastest video card, the Pascal TITAN X is also a hybrid card, like the Maxwell version, that is well suited for single-precision (SP) and deep learning compute programs. NVIDIA CEO and co-founder Jen-Hsun Huang showcased three new technologies that will fuel deep learning during his opening keynote address to the 4,000 attendees of the GPU Technology Conference.

If you want to train without a GPU (something that is not recommended) or use multiple GPUs, you can do so by adjusting the `ExecutionEnvironment` parameter in `trainingOptions`. In our tests, using an NVIDIA Titan X GPU was 30 times faster than using a CPU (Intel Xeon Ev4) to train a Faster R-CNN vehicle detector. The decidedly impressive result is the new NVIDIA TITAN X with Pascal™. When tackling deep learning challenges, nothing short of ultimate performance will get the job done, and the latest Titan X is the first GPU to consider. The Titan X with Pascal is built atop NVIDIA’s new upsized GP102 architecture. Deep Learning Benchmarks Comparison 2019: RTX 2080 Ti vs. TITAN RTX vs. RTX 6000 vs. RTX 8000, Selecting the Right GPU for Your Needs. Advantages of On-Premises Deep Learning and the Hidden Costs of Cloud Computing.

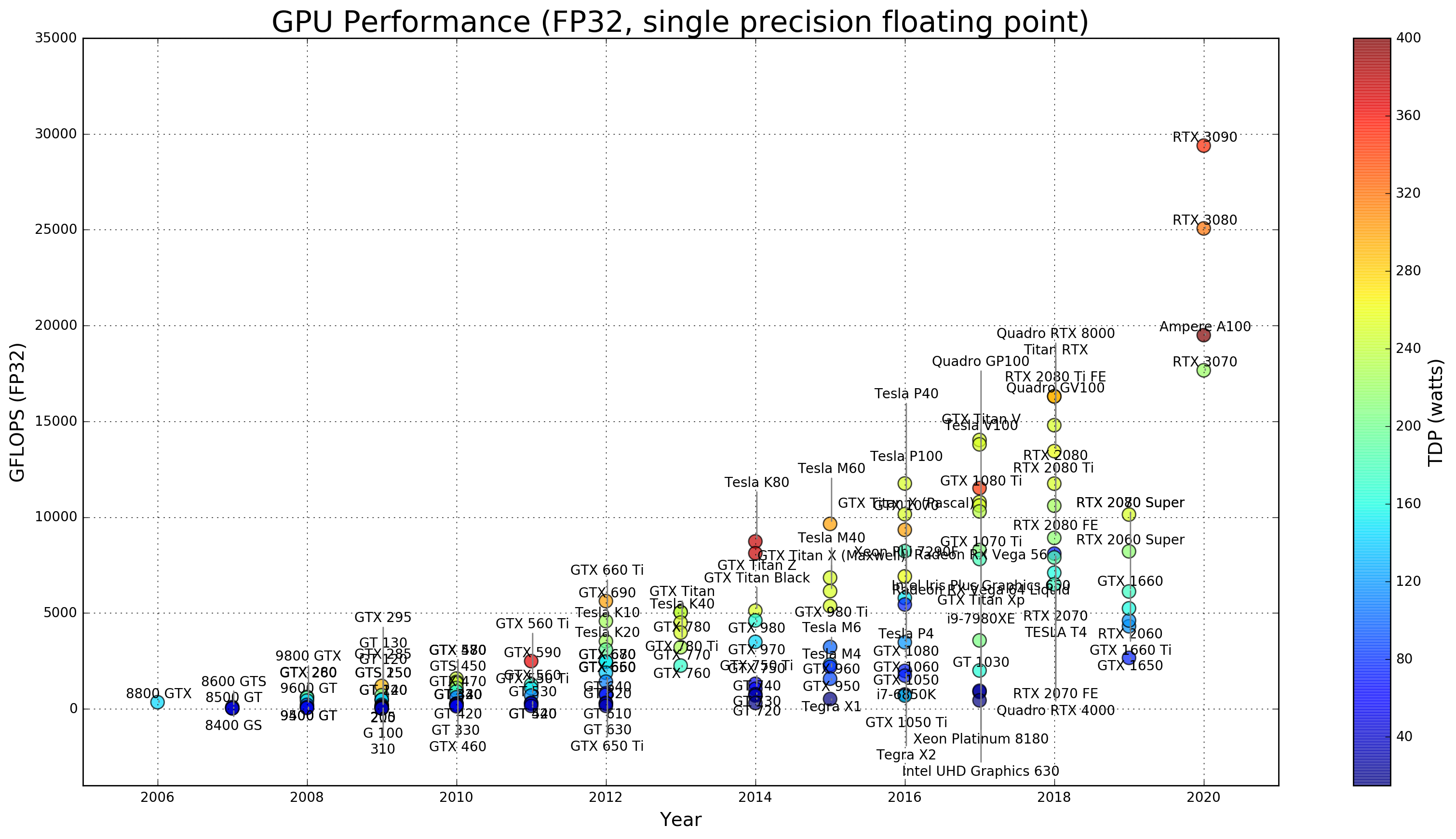

That’s 10 times more powerful than the Titan X from last year, making this a huge step up in processing power for deep learning and cryptocurrency mining. It comes with 5,120 CUDA cores for traditional GPU computing, as well as 640 Tensor cores for deep learning, with both types operating at 1,200 MHz. They can also be overclocked to 1,455 MHz. In the Faster R-CNN vehicle detector test, the CPU took a little over 600 seconds on average, while training with a GPU took just seconds: `detector = trainFasterRCNNObjectDetector(trainingData, layers, options, ...`. Bizon Z5000: liquid-cooled NVIDIA TITAN RTX deep learning and GPU rendering workstation with 4 GPUs and full custom water cooling for CPU and GPU. Whisper-quiet (40 dB).

Nvidia is updating its Deep Learning GPU Training System, or DIGITS for short, with automatic scaling across multiple GPUs within a single node. ... between the GPUs would allow Pascal GPUs to offer at least a 10X improvement in performance over the Maxwell GPUs used in the Titan X. Nvidia’s monstrous new Titan X graphics card stomps onto the scene, powered by Pascal. Nvidia’s focusing more on the graphics card’s use for professional deep-learning AI applications. The...

The performance and performance/watt of the Intel Stratix 10 FPGA and the Titan X GPU for ResNet-50 are shown in Figure 4B. Even for the conservative performance estimate, the Intel Stratix 10 FPGA is already ~60% better than the achieved Titan X GPU performance. The moderate and aggressive estimates are even better (i.e., 2.1x and 3.5x speedups).

With Titan X, Nvidia is pushing hard into “deep learning,” a flavor of artificial intelligence development that the company believes will be “an engine of computing innovation for areas as diverse...” Titan Xp vs. GTX 1080 Ti for machine learning: NVIDIA has released the Titan Xp, which is an update to the Titan X Pascal (they both use the Pascal GPU core). They also recently released the GTX 1080 Ti, which proved to be every bit as good as the Titan X Pascal but at a much lower price. The new Titan Xp does offer better performance and is currently their fastest GeForce card.

Nvidia Titan V GPU for deep learning / AI (OSX): I am contemplating options for adding a GPU to my MacBook, primarily for deep learning. I did a bit of research on enclosures, but I am getting mixed feedback, limited driver support, etc. It seems the Titan V is the best out there, but I also see above that it... Ingredient list for an external GPU setup: Lenovo X1 Carbon 5th gen (supports Thunderbolt 3); Akitio Node (external GPU case to mount the TITAN X in, supports Thunderbolt 3); Ubuntu 16.04 (64-bit); Nvidia GeForce GTX TITAN X graphics card; NVIDIA-Linux-x86_64 .run driver installer.

“GPU is lost when running Deep Learning codes on Ubuntu 16.04 with two GTX TITAN X” (forum post by dongchangliu, May 6, 5:31pm): Hi there, I’m running Ubuntu 16.04 on an HP Z640 workstation.

TITAN RTX powers AI, machine learning, and creative workflows. The most demanding users need the best tools. TITAN RTX is built on NVIDIA’s Turing GPU architecture and includes the latest Tensor Core and RT Core technology for accelerating AI and ray tracing. Do not go for GeForce series GPUs for algorithms. They are not designed for them; they are designed primarily for gaming, and that is what they should be used for. The P100 is in the Tesla series and will be more suitable for your deep learning experiments.

SabrePC GPU Deep Learning Workstations are outfitted with the latest NVIDIA GPUs. Each system ships preloaded with some of the most popular deep learning applications. SabrePC Deep Learning Systems are fully turnkey, pass rigorous testing and validation, and are built to perform out of the box. Deep Learning on Tap: NVIDIA Engineer Turns to AI, GPU to Invent New Brew. Full Nerd #1 is a light, refreshing blonde ale created by a homebrewing engineer and AI powered by an NVIDIA TITAN GPU. August 6, by Brian Caulfield. Some dream of code.

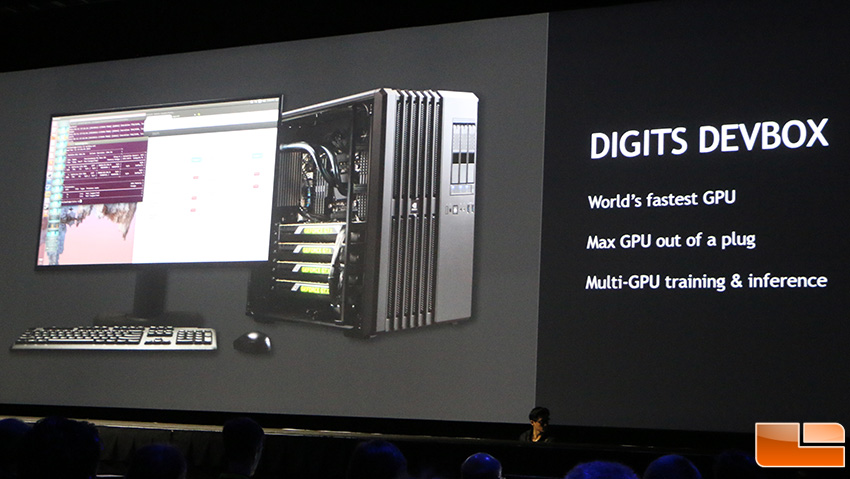

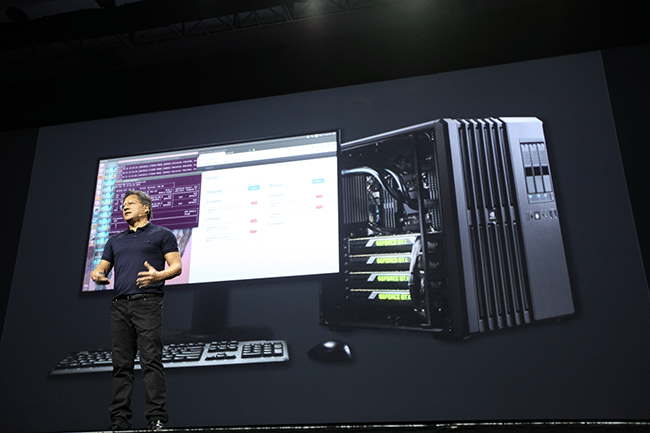

The DIGITS DevBox comprises both the DIGITS software and a quartet of Titan X GPUs, not to mention several popular deep learning frameworks, all of which promises up to four times... NVIDIA deep learning GPUs provide high processing power for training deep learning models. This article provides a review of three top NVIDIA GPUs: NVIDIA Tesla V100, GeForce RTX 2080 Ti, and NVIDIA Titan RTX. An NVIDIA deep learning GPU is typically used in combination with the NVIDIA Deep Learning SDK, called NVIDIA CUDA-X AI. This SDK is... For deep learning, the RTX 3090 is the best value GPU on the market and substantially reduces the cost of an AI workstation.

The NVIDIA Tesla V100 is a Tensor Core enabled GPU that was designed for machine learning, deep learning, and high performance computing (HPC). It is powered by NVIDIA Volta technology, which supports Tensor Core technology, specialized for accelerating common tensor operations in deep learning. RTX 2080 Ti is an excellent GPU for deep learning and offers the best performance/price. The main limitation is the VRAM size. Training on RTX 2080 Ti will require small batch sizes, and in some cases you will not be able to train large models. Using NVLink will not combine the VRAM of multiple GPUs, unlike TITANs or Quadros. The new DIGITS Deep Learning GPU Training System software is an “all-in-one graphical system for designing, training, and validating deep neural networks for image classification,” Nvidia said. The DIGITS DevBox is the “world’s fastest deskside deep learning machine,” with four Titan X GPUs at the heart of a platform designed to accelerate deep...

My deep learning computer with 4 GPUs: one Titan RTX, two 1080 Ti, and one 2080 Ti. The Titan RTX must be mounted on the bottom because the fan is not blower style. ... 290% (Titan Xp) and 115% (GTX Titan X). Accordingly, the most significant contributions of this paper are the following: a novel deep learning network, implemented using recurrent neural blocks (LSTMs), which takes the sequence of PTX assembly code of a GPU kernel and encodes it into a latent space representation that characterizes how...

NVIDIA RTX 2080 Ti is the best GPU for deep learning from a price-performance perspective in 2019. The 2080 Ti is ~80% as fast as the V100 for single-precision training of neural nets, ...% as fast with half precision, and... TITAN RTX is also supported by NVIDIA drivers and SDKs so that developers, researchers, and creators can work faster and deliver better results.

Rtx 60 Vs Gtx 1080ti Deep Learning Benchmarks Cheapest Rtx Card Vs Most Expensive Gtx Card By Eric Perbos Brinck Towards Data Science

Titanxp Vs Gtx1080ti For Machine Learning

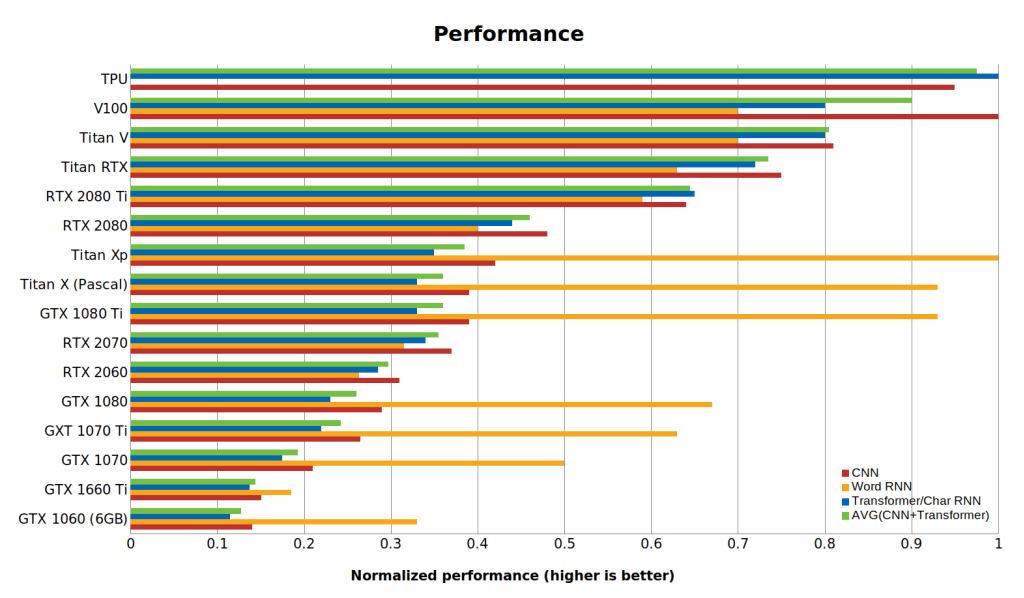

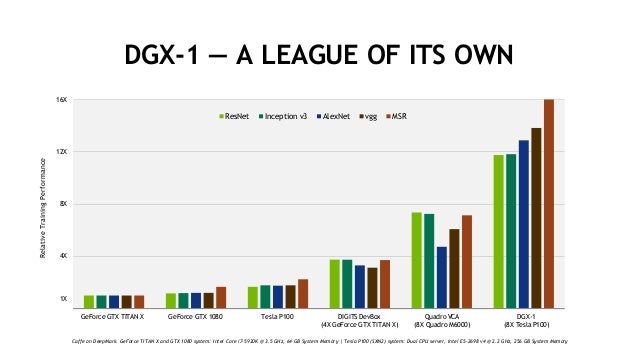

Hardware For Deep Learning Part 3 Gpu By Grigory Sapunov Intento

Titan X GPU Deep Learning gallery

Nvidia Digits Devbox Promotes Deep Learning W Titan X Legit Reviews

Picking A Gpu For Deep Learning Buyer S Guide In 19 By Slav Ivanov Slav

Which Deep Learning Gpu Is Good For Beginners Gtx 1080ti Rtx 80ti Titan V Titan Rtx Tesla V100 Titan Xp Quora

Gpu Gpu Performance And Titan V Rtx 80 Ti Benchmark

The Latest Deep Learning Challenge By Nvidia Titan X

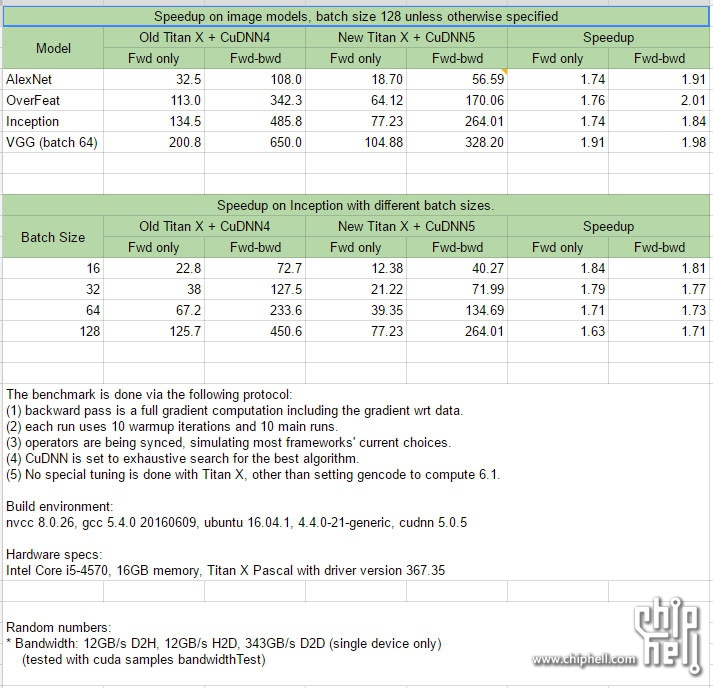

First Synthetic Benchmarks Of Nvidia Titan X Pascal Hit The Web Videocardz Com

Nvidia Unveils New Gtx Titan X 11 Teraflops 12gb Gddr5x Just 1 0 Ars Technica

Nvidia Gtx Titan X Pascal Review Vs Gtx 1080 Sli 1070s Gamersnexus Gaming Pc Builds Hardware Benchmarks

Nvidia Titan X Single Precision Is More Important

Nvidia Deep Dives Into Deep Learning With Pascal Titan X Gpus Zdnet

Bizon G3000 2 Gpu 4 Gpu Deep Learning Workstation Pc Best Deep Learning Computer 19 21

Tensorflow Performance With 1 4 Gpus Rtx Titan 80ti 80 70 Gtx 1660ti 1070 1080ti And Titan V

Nvidia S Monstrous Titan X Pascal Gpu Stomps Onto The Scene Pcworld

Deepbench Training Gemm Rnn The Nvidia Titan V Deep Learning Deep Dive It S All About The Tensor Cores

Bizon Z5000 Liquid Cooled Nvidia Rtx 3090 3080 80 Ti Titan Rtx Deep Learning And Gpu Rendering Workstation Pc 4 Gpu 7 Gpu Up To 18 Cores

Nestlogic

The Best Gpus For Deep Learning In An In Depth Analysis

Machine Learning Deep Learning Archives Kompulsa

Deep Learning Gpu Benchmarks Tesla V100 Vs Rtx 80 Ti Vs Gtx 1080 Ti Vs Titan V

Just Got An 8x Titan V Beast For Deep Learning Research Beautiful Pcmasterrace

Best Gpu For Deep Learning In Rtx 80 Ti Vs Titan Rtx Vs Rtx 6000 Vs Rtx 8000 Benchmarks Bizon Custom Workstation Computers Best Workstation Pcs For Ai Deep Learning

Original Nvidia Gtx Titan X Titan X 12gb Ddr5 High End Gaming Deep Learning Graphics 4k Graphics Graphics Cards Aliexpress

Review Nvidia Titan X Pascal Graphics Hexus Net

The Battle Of The Titans Pascal Titan X Vs Maxwell Titan X Babeltechreviews

Best Deep Learning Performance By An Nvidia Gpu Card The Winner Is Amax Blog And Insights

Deep Learning Benchmarks Comparison 19 Rtx 80 Ti Vs Titan Rtx Vs Rtx 6000 Vs Rtx 8000 Selecting The Right Gpu For Your Needs Exxact

Can Fpgas Beat Gpus In Accelerating Next Generation Deep Learning

Nvidia Ceo Talks Titan X Next Gen Pascal Deep Learning And Elon Musk At Gtc 15 Hothardware

What Is Currently The Best Gpu For Deep Learning Quora

Choose Proper Geforce Gpu S According To Your Machine Deep Learning Garden

Deep Learning And Nvidia Titan X Digits Devbox Nvidia Blog

Titan Rtx Data Science Kit Nvidia

Nvidia Unveils New Titan X Graphics Card Ai Trends

Nvidia Announces New Nvidia Titan X 1 0 12gb Of Gddr5x Shipping August 2 Extremetech

Nvidia Titanx 12g Pascal Titan X Pascal Scientific Computing Deep Learning Game Rendering Graphics Card Graphics Cards Aliexpress

Nvidia Titan Rtx Accelerate Data Science On Your Pc Maingear

Nvidia Titanx 12g Pascal Titan X Pascal Scientific Computing Deep Learning Game Rendering Graphics Card Mega Promo 0edf Cicig

Why Your Personal Deep Learning Computer Can Be Faster Than Aws And Gcp By Jeff Chen Mission Org Medium

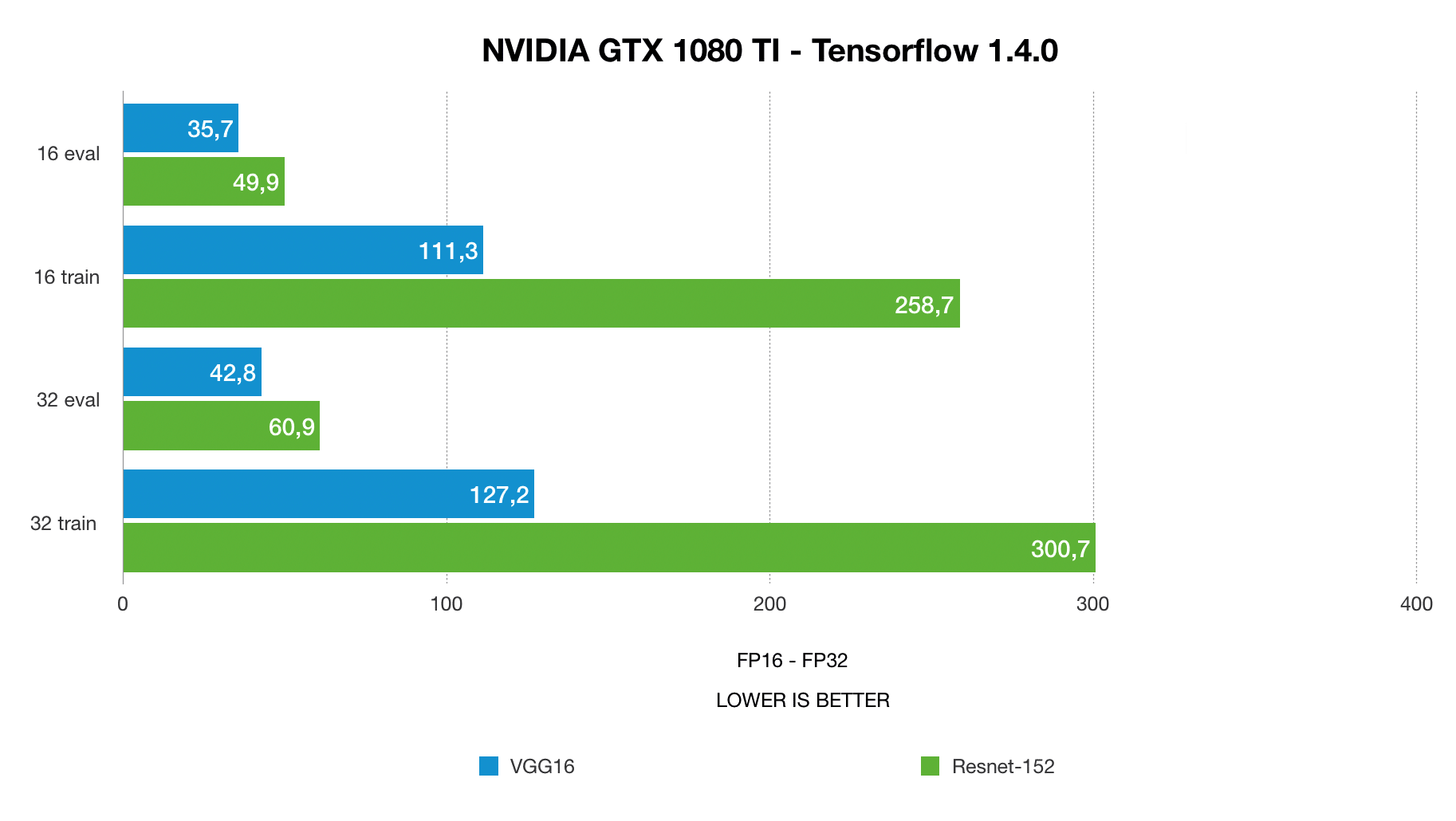

Github U39kun Deep Learning Benchmark Deep Learning Benchmark For Comparing The Performance Of Dl Frameworks Gpus And Single Vs Half Precision

Nvidia Geforce Gtx Titan X Review Pcmag

Geforce Gtx Titan X Graphics Card Geforce

Official Gtx Titan X Specs 1000 Price Unveiled At Gtc 15 Gamersnexus Gaming Pc Builds Hardware Benchmarks

Titan Rtx Ultimate Pc Graphics Card With Turing Nvidia

Nivida S New Gpu Titan Rtx Has Monster Power For Deep Learning

Lambda Deep Learning Devbox With Nvidia Digits 4x Nvidia Gtx Titan X 12gb Gpus Preinstalled With Ubuntu 14 04 Lts Cuda Caffe Torch And Cudnn Innoculous Com

Nvidia Deep Learning Solutions Alex Sabatier

The Nvidia Titan V Deep Learning Deep Dive It S All About The Tensor Cores

Fully Utilizing Your Deep Learning Gpus By Colin Shaw Medium

Nvidia Gtx Titan X 12gb In The Mac Pro 5 1 2 Titan X Gpus In One Mac Pro Most Powerful Cuda Graphics Card Ever The Ultimate Mac Pro Community

Amazon Com Evga Geforce Gtx Titan X 12gb Gaming Play 4k With Ease Graphics Card 12g P4 2990 Kr Computers Accessories

Titan V Deep Learning Benchmarks With Tensorflow In 19

Rtx Titan Tensorflow Performance With 1 2 Gpus Comparison With Gtx 1080ti Rtx 70 80 80ti And Titan V

Titan Rtx Deep Learning Benchmarks

Is The Titan X Better Than The 1080 Ti For Deep Learning And If So Why Quora

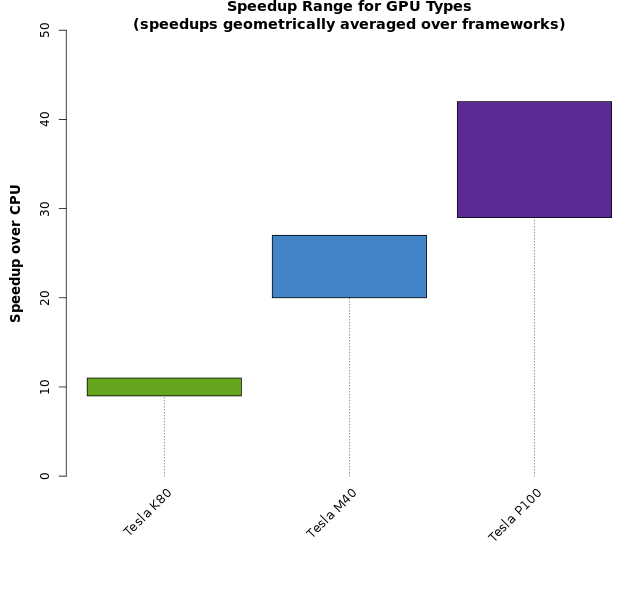

Deep Learning Benchmarks Of Nvidia Tesla P100 Pcie Tesla K80 And Tesla M40 Gpus Microway

Buy Deep Learning Devbox Intel Core I9 79x 128gb Memory 2x Nvidia Titan Xp Gpu Preinstalled Ubuntu16 04 Cuda9 Tensorflow For Machine Learning Ai 2 X Nvidia Titan Xp Gpu In Cheap

Artificial Intelligence And Robotics Pastime Discussion Forums

Hypothermia Nvidia Titan X Pascal Vs Maxwell Review

Build A Machine Learning Dev Box With Nvidia Titan X Pascal By Kunfeng Chen Medium

1080 Ti Vs Rtx 80 Ti Vs Titan Rtx Deep Learning Benchmarks With Tensorflow 18 19 Bizon Custom Workstation Computers Best Workstation Pcs For Ai Deep Learning Video Editing

Multi Gpu Scaling With Titan V And Tensorflow On A 4 Gpu Workstation

Nvidia Titan X Ultimate Graphics Card Unleashed

Performance Results Deep Learning Nvidia Titan Rtx Review Gaming Training Inferencing Pro Viz Oh My Tom S Hardware

Deep Learning Gpu Best Computer For Deep Learning Tooploox

Nvidia Geforce Gtx Titan X Pascal Graphics Card Announced

Nvidia Titan V Launched For Ai And Deep Learning First Gpu Based On Volta Architecture Technology News

Nvidia Digits Devbox And Deep Learning Demonstration Gtc 15 Youtube

Rtx 80 Ti Deep Learning Benchmarks With Tensorflow 19

Nvidia Deep Learning Sdk Now Available Ahmadreza Razian سید احمدرضا رضیانahmadreza Razian

Hardmaru Guide For Building The Best 4 Gpu Deep Learning Rig For 7000 T Co J9qvoexs9h

Titan Xp Graphics Card With Pascal Architecture Nvidia Geforce

Best Gpu S For Deep Learning 21 Updated Favouriteblog Com

Titan Rtx Benchmarks For Deep Learning In Tensorflow 19 Xla Fp16 Fp32 Nvlink Exxact

Deep Learning Nvidia Gtx Titan X Versus Gtx 750 Ti Youtube

Early Experiences With Deep Learning On A Laptop With Nvidia Gtx 1070 Gpu Part 1 Amund Tveit S Blog

Building A 5 000 Machine Learning Workstation With An Nvidia Titan Rtx And Ryzen Threadripper By Jeff Heaton Towards Data Science

Pascal Titan X Vs The Gtx 1080 First Benchmarks Revealed Babeltechreviews

Nvidia Introduces Titan V For Machine Learning Acceleration On The Pc